Businesses rely on large volumes of data to make timely decisions, optimize operations, and gain competitive insights. But before data can be analyzed, it must first be collected and centralized. This is where data ingestion comes in.

What is Data Ingestion?

Data ingestion is the process of collecting raw data from various sources—such as databases, APIs, IoT devices, and logs—and loading it into a storage or processing system like a data warehouse, data lake, or lakehouse. This foundational step ensures that data is available, fresh, and ready for transformation and analysis.
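To make the definition concrete, here is a minimal sketch of the collect-and-load step using only the Python standard library. The source names (`csv_export`, `orders_api`), the sample payloads, and the `raw_orders` landing table are all hypothetical, and an in-memory SQLite database stands in for a real warehouse or lake.

```python
import csv
import io
import json
import sqlite3

# Hypothetical source 1: a CSV export from an operational database.
CSV_EXPORT = "order_id,amount\n1,19.99\n2,5.00\n"

# Hypothetical source 2: a JSON payload such as an API might return.
API_RESPONSE = '[{"order_id": 3, "amount": 42.5}]'


def read_csv_source(text):
    """Yield (source name, raw JSON payload) pairs from CSV text."""
    for row in csv.DictReader(io.StringIO(text)):
        yield ("csv_export", json.dumps(row))


def read_api_source(text):
    """Yield (source name, raw JSON payload) pairs from a JSON array."""
    for record in json.loads(text):
        yield ("orders_api", json.dumps(record))


def ingest(conn, records):
    """Load raw records into a landing table and return how many were loaded."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_orders (source TEXT, payload TEXT)"
    )
    rows = list(records)
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")  # stands in for a warehouse or data lake
loaded = ingest(conn, read_csv_source(CSV_EXPORT))
loaded += ingest(conn, read_api_source(API_RESPONSE))
total = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(loaded, total)
```

The payloads are stored untouched, tagged only with their source. Cleaning and reshaping are left to the later transformation step.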

Why Data Ingestion Matters

- Powers real-time and batch analytics – Lets businesses react to events as they arrive or process large datasets on a schedule.

- Ensures data availability – Keeps systems updated with the latest information.

- Supports decision-making at scale – Handles massive, diverse datasets from multiple sources.

- Integrates with modern data architectures – Connects to cloud platforms, edge computing, and big data ecosystems.

When & Where Does Data Ingestion Happen?

When:

- Real-time (streaming): Continuously processes live data (e.g., stock market feeds, IoT sensors).

- Scheduled (batch): Processes data in chunks at set intervals (e.g., daily sales reports).

Where:

- On-premises servers – Traditional data centers.

- Cloud platforms – AWS, Azure, Google Cloud.

- Edge devices – IoT and mobile data collection.

- Big data platforms – Hadoop, Spark, and other distributed systems.

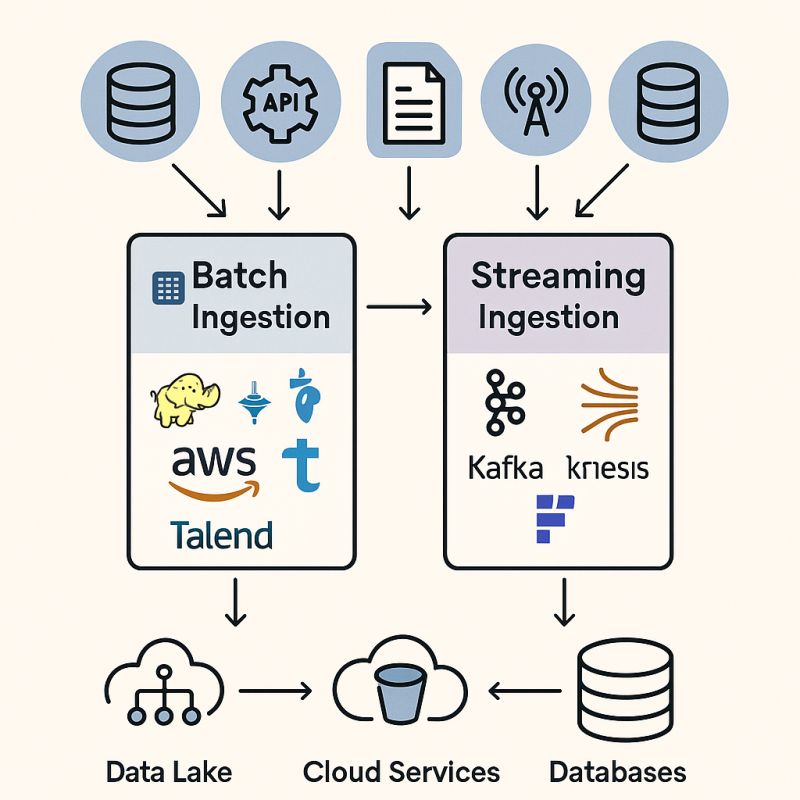

Types of Data Ingestion

- Batch Ingestion

  - Processes large volumes of data at scheduled times.

  - Example: Daily log files, end-of-day transaction reports.

- Streaming Ingestion

  - Handles real-time data flows.

  - Example: Live user clicks, sensor telemetry, social media feeds.
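The difference between the two types can be sketched in a few lines of plain Python. This is an illustration only: the click events are made up, and a generator stands in for a live feed that would normally come from a message broker.

```python
from collections import Counter

# Hypothetical click events; in practice these would come from files or a broker.
EVENTS = [
    {"user": "a", "page": "/home"},
    {"user": "b", "page": "/cart"},
    {"user": "a", "page": "/cart"},
]


def batch_ingest(events):
    """Batch: take the whole accumulated dataset and process it in one pass."""
    return Counter(e["page"] for e in events)


def event_stream(events):
    """Stand-in for a live feed: yields one event at a time."""
    for event in events:
        yield event


def stream_ingest(stream):
    """Streaming: update state per event and expose a snapshot after each one."""
    counts = Counter()
    for event in stream:
        counts[event["page"]] += 1
        yield dict(counts)


batch_result = batch_ingest(EVENTS)
snapshots = list(stream_ingest(event_stream(EVENTS)))
print(dict(batch_result))
print(snapshots[0], snapshots[-1])
```

Both paths end with the same totals. The batch path produces them once, after all the data has arrived, while the streaming path has a usable result after every event.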

Popular Data Ingestion Tools

Batch Processing:

- Apache Sqoop (retired to the Apache Attic in 2021; still found in legacy Hadoop stacks)

- Talend

- Informatica

Stream Processing:

- Apache Kafka

- Amazon Kinesis

- Google Cloud Pub/Sub

Cloud-Based Services:

- AWS Glue

- Azure Data Factory

- Google Cloud Dataflow

Data Integration Tools:

- Apache NiFi

- StreamSets

Real-World Use Cases

- 📈 E-commerce: Tracking customer orders in real time for inventory and delivery updates.

- 💹 Finance: Ingesting stock market data for algorithmic trading.

- 🏥 Healthcare: Aggregating patient records for predictive diagnostics.

- 📲 IoT: Streaming sensor data for predictive maintenance in manufacturing.

- 🗣️ Social Media: Feeding tweets into sentiment analysis models for brand monitoring.

Final Thoughts

Data ingestion is the backbone of any modern data pipeline, moving raw data into systems where it can be transformed, analyzed, and turned into actionable insights. Whether you’re handling streaming data or batch processing, choosing the right ingestion strategy and tools is crucial for scalability, performance, and timely decision-making.